Publications

You can also find my articles on my Google Scholar Profile.

Apprenticeship learning

| Manipulate by Seeing: Creating Manipulation Controllers from Pre-Trained Representations Jianren Wang*, Sudeep Dasari*, Mohan Kumar Srirama, Shubham Tulsiani, Abhinav Gupta (* indicates equal contribution) 2023 International Conference on Computer Vision (Oral) [Project Page] [Code] [Abstract] [Bibtex] The field of visual representation learning has seen explosive growth in the past years, but its benefits in robotics have been surprisingly limited so far. Prior work uses generic visual representations as a basis to learn (task-specific) robot action policies (e.g. via behavior cloning). While the visual representations do accelerate learning, they are primarily used to encode visual observations. Thus, action information has to be derived purely from robot data, which is expensive to collect! In this work, we present a scalable alternative where the visual representations can help directly infer robot actions. We observe that vision encoders express relationships between image observations as distances (e.g. via embedding dot product) that could be used to efficiently plan robot behavior. We operationalize this insight and develop a simple algorithm for acquiring a distance function and dynamics predictor, by fine-tuning a pre-trained representation on human-collected video sequences. The final method is able to substantially outperform traditional robot learning baselines (e.g. 70% success vs. 50% for behavior cloning on pick-place) on a suite of diverse real-world manipulation tasks. It can also generalize to novel objects, without using any robot demonstrations during training.

@article{wang2023manipulate,

title={Manipulate by Seeing: Creating Manipulation Controllers from Pre-Trained Representations},

author={Wang, Jianren and Dasari, Sudeep and Srirama, Mohan Kumar and Tulsiani, Shubham and Gupta, Abhinav},

journal={ICCV},

year={2023}

}

|

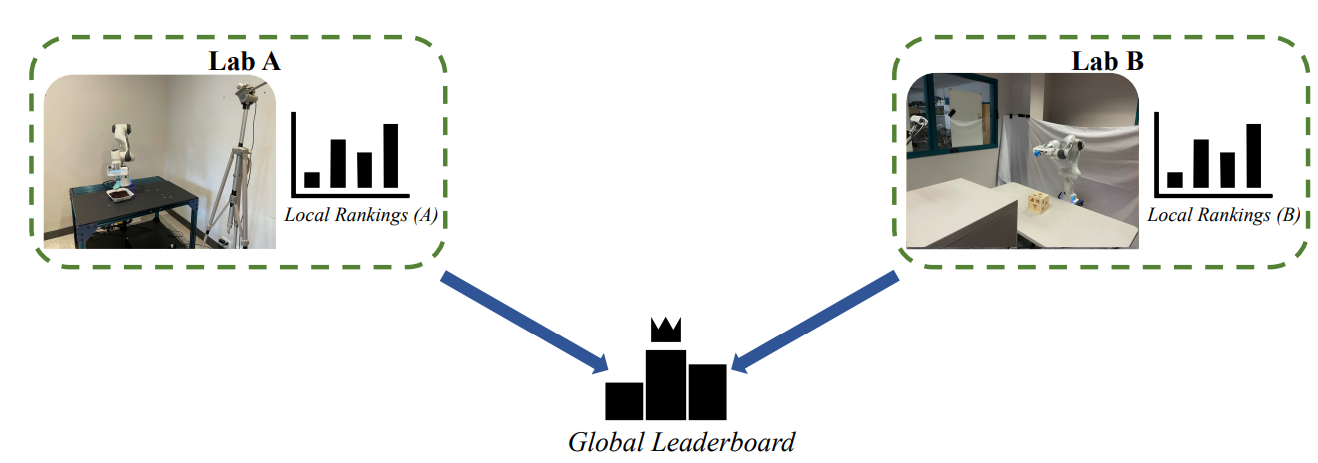

| RB2: Robotic Manipulation Benchmarking with a Twist Sudeep Dasari, Jianren Wang, Joyce Hong, Shikhar Bahl, Yixin Lin, Austin Wang, Abitha Thankaraj, Karanbir Chahal, Berk Calli, Saurabh Gupta, David Held, Lerrel Pinto, Deepak Pathak, Vikash Kumar, Abhinav Gupta 2021 Conference on Neural Information Processing Systems [Project Page] [Code] [Abstract] [Bibtex] Benchmarks offer a scientific way to compare algorithms using objective performance metrics. Good benchmarks have two features: (a) they should be widely useful for many research groups; and (b) they should produce reproducible findings. In robotic manipulation research, there is a trade-off between reproducibility and broad accessibility. If the benchmark is kept restrictive (fixed hardware, objects), the numbers are reproducible but the setup becomes less general. On the other hand, a benchmark could be a loose set of protocols (e.g. YCB object set) but the underlying variation in setups makes the results non-reproducible. In this paper, we re-imagine benchmarking for robotic manipulation as state-of-the-art algorithmic implementations, alongside the usual set of tasks and experimental protocols. The added baseline implementations will provide a way to easily recreate SOTA numbers in a new local robotic setup, thus providing credible relative rankings between existing approaches and new work. However, these "local rankings" could vary between different setups. To resolve this issue, we build a mechanism for pooling experimental data between labs, and thus we establish a single global ranking for existing (and proposed) SOTA algorithms. Our benchmark, called Ranking-Based Robotics Benchmark (RB2), is evaluated on tasks that are inspired by the clinically validated Southampton Hand Assessment Procedures. Our benchmark was run across two different labs and reveals several surprising findings. For example, extremely simple baselines like open-loop behavior cloning outperform more complicated models (e.g. closed loop, RNN, Offline-RL, etc.) that are preferred by the field. We hope our fellow researchers will use RB2 to improve their research's quality and rigor.

@article{wang2021aril,

title={RB2: Robotic Manipulation Benchmarking with a Twist},

author={Dasari, Sudeep and Wang, Jianren and ... and Gupta, Saurabh and Held, David and Pinto, Lerrel and Pathak, Deepak and Kumar, Vikash and Gupta, Abhinav},

journal={Thirty-fifth Conference on Neural Information Processing Systems},

year={2021}

}

|

| Adversarially Robust Imitation Learning Jianren Wang, Ziwen Zhuang, Yuyang Wang, Hang Zhao 2021 Conference on Robot Learning [Project Page] [Code] [Abstract] [Bibtex] Modern imitation learning (IL) utilizes deep neural networks (DNNs) as function approximators to mimic the policy of the expert demonstrations. However, DNNs can be easily fooled by subtle noise added to the input, which is undetectable even by humans. This makes the learned agent vulnerable to attacks, especially in IL where agents can struggle to recover from errors. In this light, we propose a sound Adversarially Robust Imitation Learning (ARIL) method. In our setting, an agent and an adversary are trained alternately. The former, with adversarially attacked input at each timestep, mimics the behavior of an online expert, and the latter learns to add perturbations to the states by forcing the learned agent to fail to choose the right decisions. We theoretically prove that ARIL can achieve adversarial robustness and evaluate ARIL on multiple benchmarks from DM Control Suite. The results show that ARIL achieves better robustness compared with other imitation learning methods under both sensory attack and physical attack.

@article{wang2021aril,

title={Adversarially Robust Imitation Learning},

author={Wang, Jianren and Zhuang, Ziwen and Wang, Yuyang and Zhao, Hang},

journal={CORL},

year={2021}

}

|

| Wanderlust: Online Continual Object Detection in the Real World Jianren Wang, Xin Wang, Yue Shang-Guan, Abhinav Gupta 2021 International Conference on Computer Vision [Project Page] [Code] [Abstract] [Bibtex] Online continual learning from data streams in dynamic environments is a critical direction in the computer vision field. However, realistic benchmarks and fundamental studies in this line are still missing. To bridge the gap, we present a new online continual object detection benchmark with an egocentric video dataset, Objects Around Krishna (OAK). OAK adopts the KrishnaCAM videos, an ego-centric video stream collected over nine months by a graduate student. OAK provides exhaustive bounding box annotations of 80 video snippets (~17.5 hours) for 105 object categories in outdoor scenes. The emergence of new object categories in our benchmark follows a pattern similar to what a single person might see in their day-to-day life. The dataset also captures the natural distribution shifts as the person travels to different places. These egocentric long running videos provide a realistic playground for continual learning algorithms, especially in online embodied settings. We also introduce new evaluation metrics to evaluate the model performance and catastrophic forgetting and provide baseline studies for online continual object detection. We believe this benchmark will pose new exciting challenges for learning from non-stationary data in continual learning.

@article{wang2021wanderlust,

title={Wanderlust: Online Continual Object Detection in the Real World},

author={Wang, Jianren and Wang, Xin and Shang-Guan, Yue and Gupta, Abhinav},

journal={ICCV},

year={2021}

}

|

| PanoNet3D: Combining Semantic and Geometric Understanding for LiDAR Point Cloud Detection Xia Chen, Jianren Wang, David Held, Martial Hebert 2020 International Virtual Conference on 3D Vision [Project Page] [Code] [Abstract] [Bibtex] Visual data in autonomous driving perception, such as camera image and LiDAR point cloud, can be interpreted as a mixture of two aspects: semantic feature and geometric structure. Semantics come from the appearance and context of objects to the sensor, while geometric structure is the actual 3D shape of point clouds. Most detectors on LiDAR point clouds focus only on analyzing the geometric structure of objects in real 3D space. Unlike previous works, we propose to learn both semantic feature and geometric structure via a unified multi-view framework. Our method exploits the nature of LiDAR scans -- 2D range images, and applies well-studied 2D convolutions to extract semantic features. By fusing semantic and geometric features, our method outperforms state-of-the-art approaches in all categories by a large margin. The methodology of combining semantic and geometric features provides a unique perspective of looking at the problems in real-world 3D point cloud detection.

@inproceedings{xia20panonet3d,

Author = {Chen, Xia and Wang, Jianren and Held, David and Hebert, Martial},

Title = {PanoNet3D: Combining Semantic and Geometric Understanding for LiDAR Point Cloud Detection},

Booktitle = {3DV},

Year = {2020}

}

|

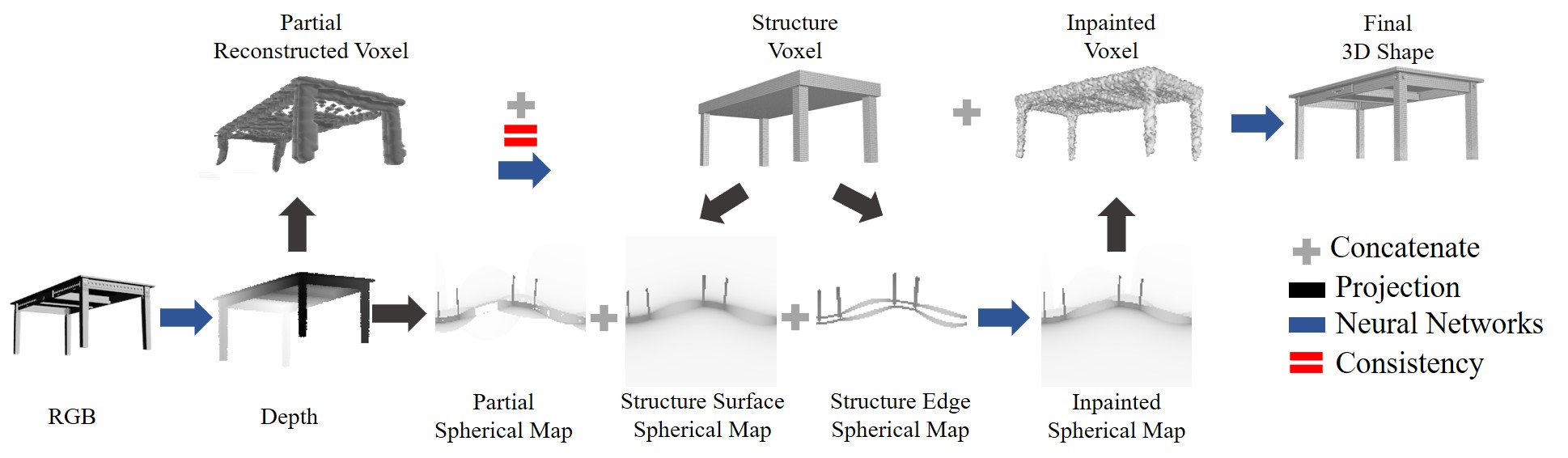

| GSIR: Generalizable 3D Shape Interpretation and Reconstruction Jianren Wang, Zhaoyuan Fang 2020 The European Conference on Computer Vision [Project Page] [Code] [Abstract] [Bibtex] 3D shape interpretation and reconstruction are closely related to each other but have long been studied separately and often end up with priors that are highly biased towards the training classes. In this paper, we present an algorithm, Generalizable 3D Shape Interpretation and Reconstruction (GSIR), designed to jointly learn these two tasks to capture generic, class-agnostic shape priors for a better understanding of 3D geometry. We propose to recover 3D shape structures as cuboids from partial reconstruction and use the predicted structures to further guide full 3D reconstruction. The unified framework is trained simultaneously offline to learn a generic notion and can be fine-tuned online for specific objects without any annotations. Extensive experiments on both synthetic and real data demonstrate that introducing 3D shape interpretation improves the performance of single image 3D reconstruction and vice versa, achieving the state-of-the-art performance on both tasks for objects in both seen and unseen categories.

@inproceedings{wang2020gsir,

title={GSIR: Generalizable 3D Shape Interpretation and Reconstruction},

author={Wang, Jianren and Fang, Zhaoyuan},

booktitle={European Conference on Computer Vision},

pages={498--514},

year={2020},

organization={Springer}

}

|

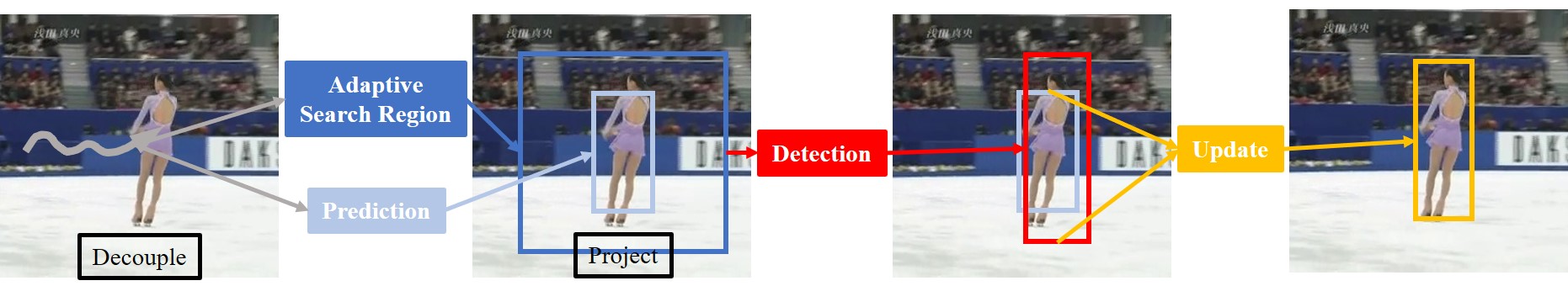

| Motion Prediction in Visual Object Tracking Jianren Wang*, Yihui He* (* indicates equal contribution) 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems |

| Deep Mixture Density Network for Object Detection under Occlusion Yihui He*, Jianren Wang* (* indicates equal contribution) 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems |

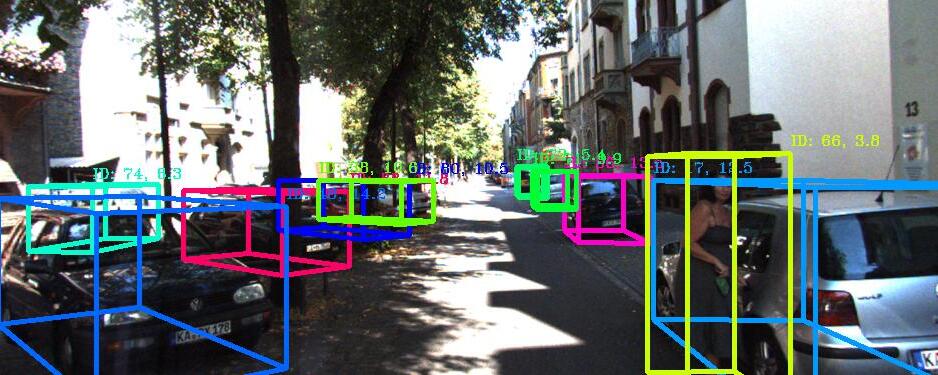

| 3D Multi-Object Tracking: A Baseline and New Evaluation Metrics Xinshuo Weng, Jianren Wang, David Held, Kris M. Kitani 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems [Project Page] [Code] [Abstract] [Bibtex] 3D multi-object tracking (MOT) is an essential component for many applications such as autonomous driving and assistive robotics. Recent work on 3D MOT focuses on developing accurate systems, giving less attention to practical considerations such as computational cost and system complexity. In contrast, this work proposes a simple real-time 3D MOT system. Our system first obtains 3D detections from a LiDAR point cloud. Then, a straightforward combination of a 3D Kalman filter and the Hungarian algorithm is used for state estimation and data association. Additionally, 3D MOT datasets such as KITTI evaluate MOT methods in the 2D space, and standardized 3D MOT evaluation tools are missing for a fair comparison of 3D MOT methods. Therefore, we propose a new 3D MOT evaluation tool along with three new metrics to comprehensively evaluate 3D MOT methods. We show that, although our system employs a combination of classical MOT modules, we achieve state-of-the-art 3D MOT performance on two 3D MOT benchmarks (KITTI and nuScenes). Surprisingly, although our system does not use any 2D data as inputs, we achieve competitive performance on the KITTI 2D MOT leaderboard. Our proposed system runs at a rate of 207.4 FPS on the KITTI dataset, achieving the fastest speed among all modern MOT systems.

@article{Weng2020_AB3DMOT,

author = {Weng, Xinshuo and Wang, Jianren and Held, David and Kitani, Kris},

journal = {IROS},

title = {3D Multi-Object Tracking: A Baseline and New Evaluation Metrics},

year = {2020}

}

|

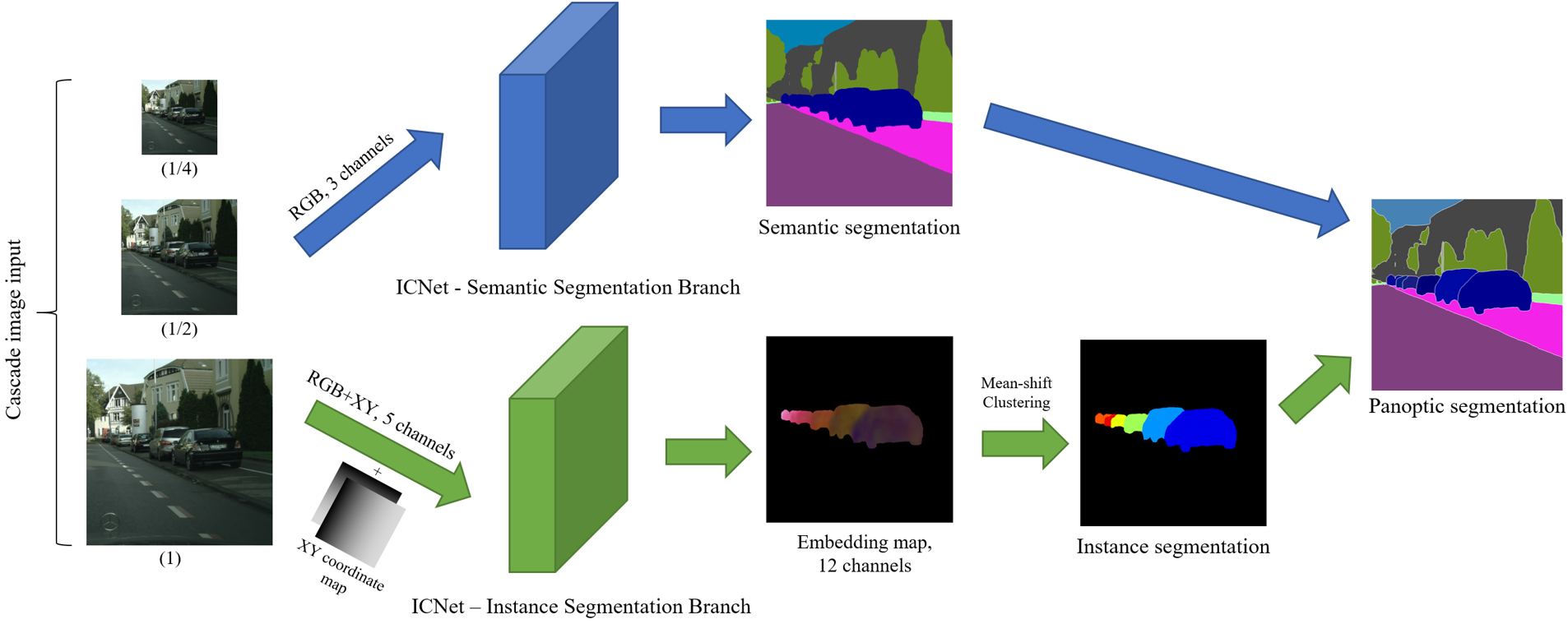

| PanoNet: Real-time Panoptic Segmentation through Position-Sensitive Feature Embedding Xia Chen, Jianren Wang, Martial Hebert |

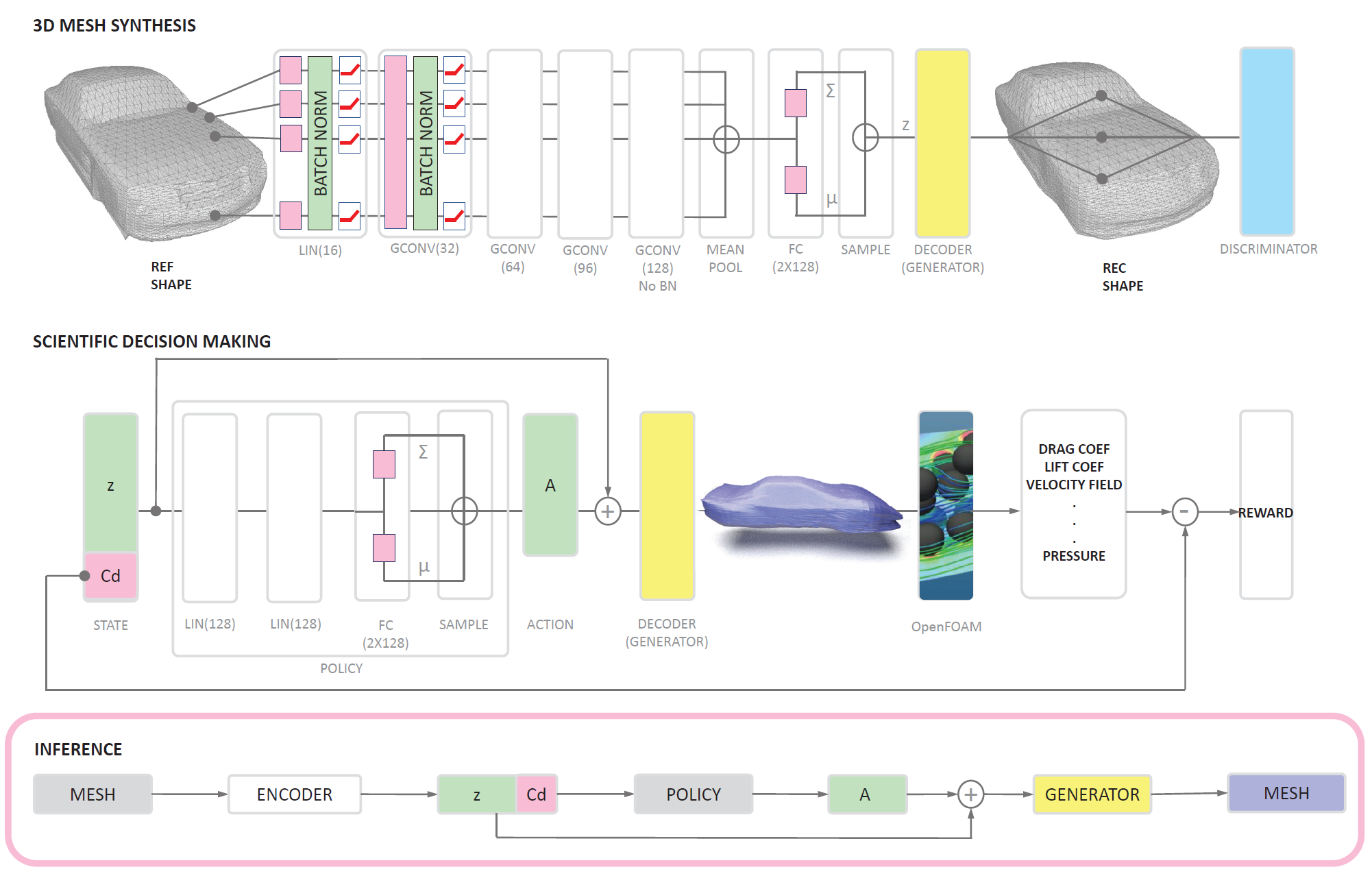

| Physics-Aware 3D Mesh Synthesis Jianren Wang, Yihui He 2019 International Conference on 3D Vision |

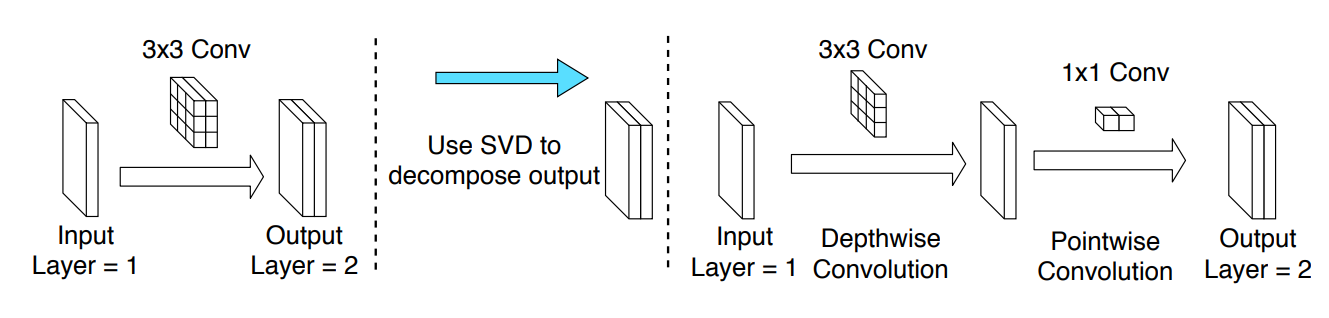

| Depth-wise Decomposition for Accelerating Separable Convolutions in Efficient Convolutional Neural Networks Yihui He*, Jianing Qian*, Jianren Wang (* indicates equal contribution) 2019 IEEE Conference on Computer Vision and Pattern Recognition Workshop |

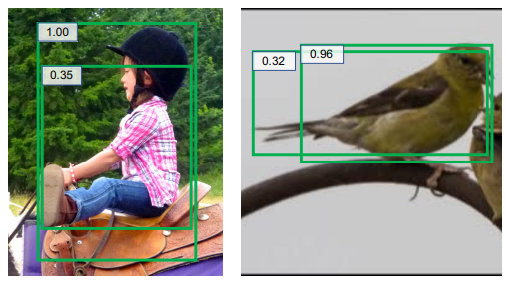

| Bounding Box Regression with Uncertainty for Accurate Object Detection Yihui He, Chenchen Zhu, Jianren Wang, Marios Savvides, Xiangyu Zhang 2019 IEEE Conference on Computer Vision and Pattern Recognition [Code] |

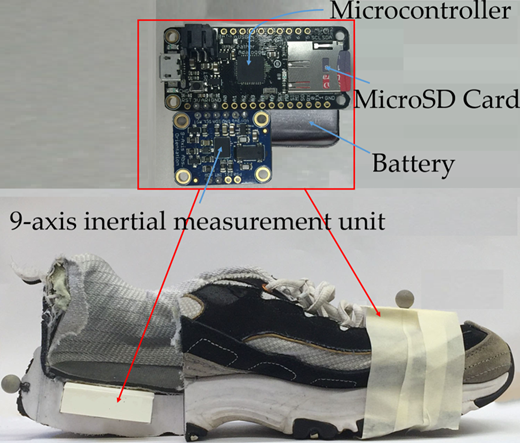

| Vertical Jump Height Estimation Algorithm Based on Takeoff and Landing Identification Via Foot-Worn Inertial Sensing Jianren Wang, Junkai Xu, Peter B Shull 2018 Journal of biomechanical engineering |

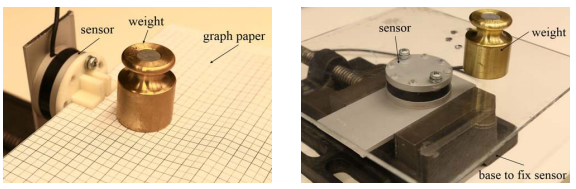

| Integration of a Low-Cost Three-Axis Sensor for Robot Force Control Shuyang Chen, Jianren Wang, Peter Kazanzides 2018 Second IEEE International Conference on Robotic Computing |

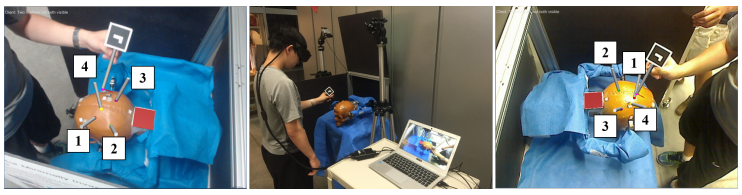

| Prioritization and static error compensation for multi-camera collaborative tracking in augmented reality Jianren Wang, Long Qian, Ehsan Azimi, Peter Kazanzides 2017 IEEE Virtual Reality |

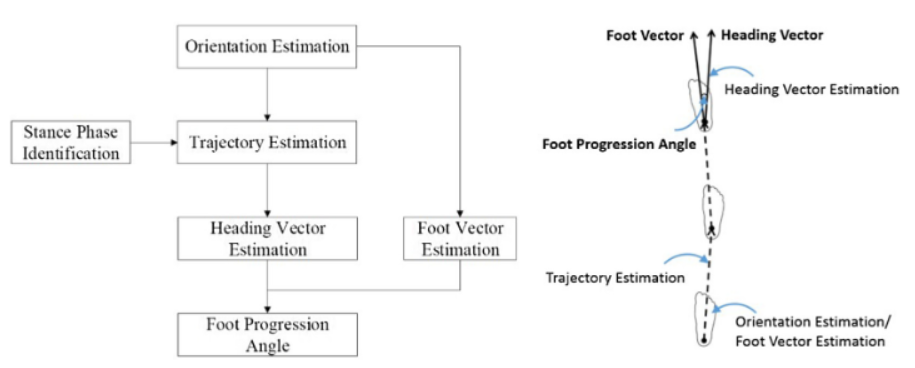

| Validation of a smart shoe for estimating foot progression angle during walking gait Haisheng Xia, Junkai Xu, Jianren Wang, Michael A Hunt, Peter B Shull 2017 Journal of biomechanics |